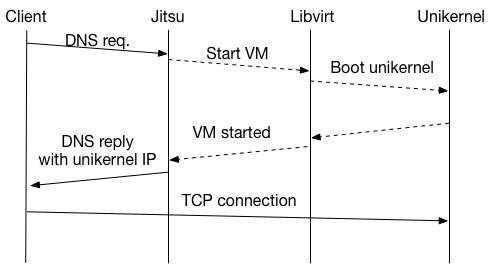

Jitsu - or Just-in-Time Summoning of Unikernels - is a prototype DNS server that can boot virtual machines on demand. When Jitsu receives a DNS query, a virtual machine is booted automatically before the query response is sent back to the client. If the virtual machine is a unikernel, it can boot in milliseconds and be available as soon as the client receives the response. To the client, it looks as if the service had been running the whole time.
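To make the flow above concrete, here is a minimal toy model in Python. It is not Jitsu's code: the `Service` and `OnDemandResolver` classes, the `boot` callback, and the example name and address are all hypothetical, and the "boot" step only records a log entry where a real system would ask the hypervisor to start a VM. It also assumes that a VM which is already running is not booted again.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Service:
    """A DNS name mapped to a VM that may not be running yet (hypothetical model)."""
    ip: str
    boot: Callable[[], None]  # stand-in for a request to the hypervisor
    running: bool = False


@dataclass
class OnDemandResolver:
    """Toy resolver that starts a service the first time its name is looked up."""
    services: Dict[str, Service] = field(default_factory=dict)
    log: List[str] = field(default_factory=list)

    def register(self, name: str, ip: str) -> None:
        # The boot callback only records the event; no VM is started here.
        self.services[name] = Service(
            ip=ip, boot=lambda: self.log.append(f"boot {name}")
        )

    def resolve(self, name: str) -> Optional[str]:
        service = self.services.get(name)
        if service is None:
            return None  # unknown name: nothing to boot, no answer
        if not service.running:
            service.boot()  # trigger the boot before answering
            service.running = True
        return service.ip  # the answer goes out only after the boot was triggered


resolver = OnDemandResolver()
resolver.register("www.example.org", "192.0.2.10")
first = resolver.resolve("www.example.org")   # triggers the boot
second = resolver.resolve("www.example.org")  # service already running
```

In this sketch both lookups return the same address, and only the first one adds a boot entry to `resolver.log`, which mirrors the idea that the client cannot tell whether the service existed before it asked.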

Jitsu can be used to run microservices that only exist after they have been resolved in DNS, and in the future it could perhaps facilitate demand-driven clouds or extreme scaling with a unikernel per URL. Jitsu has also been used to boot unikernels in milliseconds on ARM devices.

A new version of Jitsu was just released, and I'll summarize some of the old and new features here. This is the first version to support both MirageOS and Rumprun unikernels and to use the distributed Irmin database to store state. A full list of changes is available here.